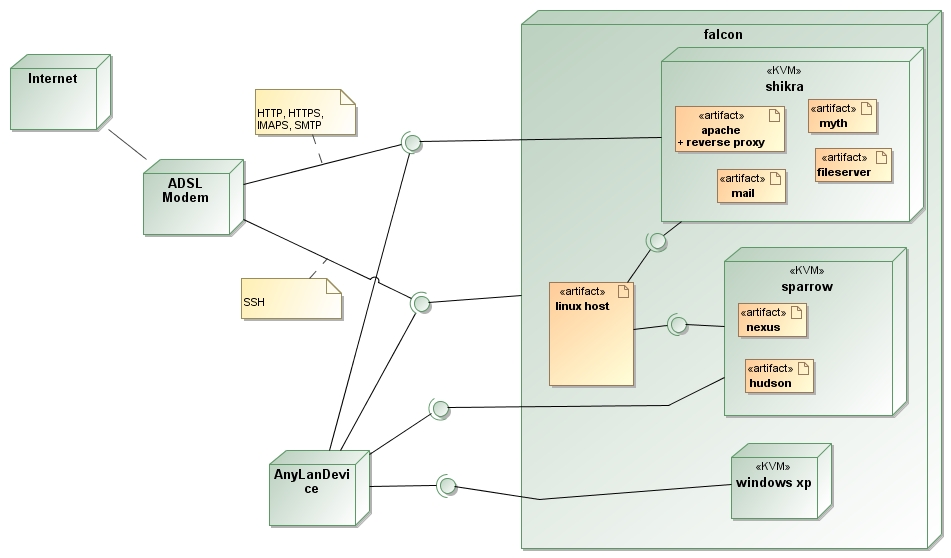

Over the past weeks I have spent a huge amount of time setting up the new server and making sure that I preserved all the functionality I had before. My aim was to take my old server installation, which is 32 bit, and run it as one of the guests on the new 64 bit server, with the guest upgraded to 64 bit as well.

Experimentation with KVM

This turned out to be no easy task. For one, I had to do a lot of experimentation with KVM to get to know it, plus benchmarking to determine what the server setup should look like. This was done on the laptop. Then the parts for the server arrived and I had to assemble it. This was in fact easier than I thought, but it also involved a lot of stability testing, in particular to determine whether or not I had a cooling problem (it turned out everything was cool from the start).

PCI Passthrough of the TV card

Then the next phase of the server setup started and I tried to use PCI passthrough of my WinTV PVR-500 card to a VM, which failed horribly because of shared IRQs. I even ordered and tried out other TV cards that were based on USB. The first card I ordered did not work because its hardware turned out not to be supported (this was unexpected since the card was listed as supported, but the manufacturer had changed the hardware specs without changing the product number). Then I got one that actually worked, including USB passthrough to the guest. However, that resulted in jerky video and audio because, as it turns out, KVM only supports USB 1.1, which is too slow for this. Looking around a little, it turns out that USB 2.0 in virtualization is still cutting edge and usually found only in commercial offerings. I even tried Xen again but quickly gave up on that because of its huge complexity.
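For anyone wanting to check for the same problem: on Linux, devices that share an IRQ show up on the same line of `/proc/interrupts`, separated by commas. The following is a minimal sketch of how that file could be scanned for shared lines. The sample text and the device names in it are made up for illustration and are not output from my server.

```python
from pathlib import Path

# Illustrative sample only (hypothetical counts and device names),
# laid out like /proc/interrupts on a two-CPU machine.
SAMPLE = """\
           CPU0       CPU1
  0:         46          0   IO-APIC-edge      timer
 16:       1200        340   IO-APIC-fasteoi   uhci_hcd:usb3, ivtv0
 19:        880         12   IO-APIC-fasteoi   ata_piix, ivtv1
 23:         75          0   IO-APIC-fasteoi   ehci_hcd:usb1
NMI:          0          0   Non-maskable interrupts
"""


def shared_irqs(text):
    """Return {irq_number: [device names]} for IRQs used by more than one device."""
    lines = text.splitlines()
    if not lines:
        return {}
    ncpu = len(lines[0].split())  # the header has one column per CPU
    shared = {}
    for line in lines[1:]:
        label, sep, rest = line.partition(":")
        label = label.strip()
        if not sep or not label.isdigit():
            continue  # skip non-numeric rows such as NMI or LOC
        fields = rest.split(None, ncpu)
        if len(fields) <= ncpu:
            continue  # no device column on this line
        tail = fields[ncpu]  # interrupt controller type plus device list
        # The first comma-separated part still carries the controller type
        # in front of it, so only its last word is the device name.
        names = [part.split()[-1] for part in tail.split(",") if part.strip()]
        if len(names) > 1:
            shared[int(label)] = names
    return shared


if __name__ == "__main__":
    proc = Path("/proc/interrupts")
    text = proc.read_text() if proc.exists() else SAMPLE
    for irq, names in sorted(shared_irqs(text).items()):
        print(f"IRQ {irq} is shared by: {', '.join(names)}")
```

A device that is to be passed through should ideally not appear on any of the lines this reports.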

Stability Problems

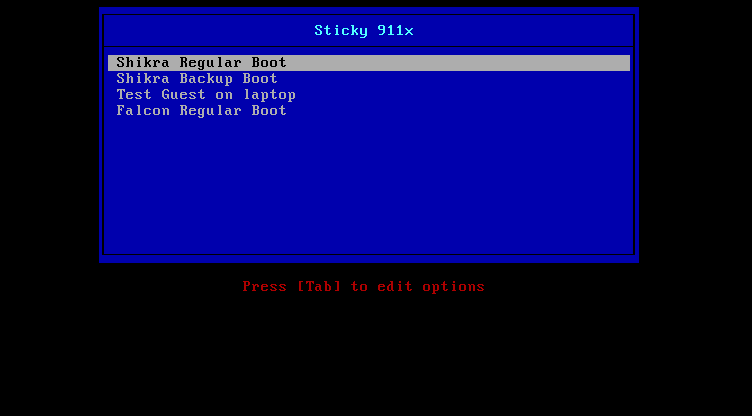

To make progress I decided I might just as well run the old server non-virtualized and use KVM to add other guests on top of that. So I set up the server in this way and installed it in the server rack. Then the next problems occurred. The server didn’t even survive one night of running a backup and was dead in the morning. The next attempt at a backup also failed. Then I looked into the BIOS event log and saw loads of messages occurring (at least 20 per hour). This was no good, but with the help of customer support from Supermicro, a new firmware install for the IPMI and BIOS removed most of the events.

Still I got a lot of events, mostly about fans, which was no good. Then I remembered that I had used Linux fancontrol to control fan speed. As it turned out, this conflicted with the IPMI, which was also monitoring fan speed. Disabling the fan control again provided a great improvement, but events still occurred a couple of times per day. A closer look revealed that they occurred at exactly 10 minute boundaries, which was exactly the interval at which I was monitoring the system using Linux sensors with the w83795 driver. As it turns out, the sensors cannot be read concurrently, and this resulted in strange fan readings at some points in time. Disabling the sensors style monitoring fixed that problem as well. So after all of this I had a stable server.

PCI Passthrough Solved

Then, still not satisfied with the crummy server setup with the old server running as host and not as a guest, I discussed my issue with people on the KVM mailing list. Luckily that provided some suggestions on how to solve it. It turns out that shared IRQs between the ivtv driver and USB and serial ATA were the cause of the problem. However, it is possible to unbind USB PCI devices in Linux, and using this I could remove the shared IRQ conflicts between ivtv and USB. This resulted in a successful PCI passthrough of one of the tuners on the PVR-500 card, but not the other one because of a shared IRQ with ATA.
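Unbinding a PCI device from its driver is done through sysfs: writing the device's PCI address to the `unbind` file of the driver it is bound to detaches it. Below is a minimal sketch of that step. The helper names and the PCI address `0000:00:1d.0` are hypothetical, the `sysfs_root` parameter exists only so the function can be tried against a scratch directory, and on a real system this needs root.

```python
from pathlib import Path


def unbind_path(address, sysfs_root="/sys"):
    """Path of the 'unbind' file of the driver currently bound to a PCI device."""
    return Path(sysfs_root) / "bus" / "pci" / "devices" / address / "driver" / "unbind"


def unbind_pci_device(address, sysfs_root="/sys"):
    """Detach the PCI device at `address` (e.g. '0000:00:1d.0') from its driver.

    Returns False if no driver is bound to the device, True after the
    address has been written to the driver's unbind file.
    """
    path = unbind_path(address, sysfs_root)
    if not path.parent.exists():
        return False  # the 'driver' link is absent when nothing is bound
    path.write_text(address)
    return True


if __name__ == "__main__":
    # Hypothetical address of a USB controller; find real ones with lspci -D.
    # Only the path is printed here, nothing is unbound.
    print(unbind_path("0000:00:1d.0"))
```

Unbinding a USB controller takes every device attached to it offline, so this only makes sense for controllers that nothing important on the host depends on.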

A number of days later I got the idea to look at the SATA configuration in the BIOS, and it turns out I could configure AHCI or Intel RAID instead of the default SATA setting. That effectively removed the conflicts with ATA, resulting in a successful PCI passthrough of both tuners on the TV card. So, after all of this I could run the old 32 bit server purely virtualized.

Other Challenges

However, running a virtualized server provides some challenges, such as automated start, stop, network configuration, firewalling, and backup. Backup in particular was a challenge because it is impossible to add a new disk to a running server and I wanted to reuse my existing backup solution. USB was not a solution for this because of the limitations of USB 1.1. Luckily, however, iSCSI works quite well and provided exactly what I needed. The only thing was that the Linux community apparently changed their minds on the iSCSI target implementation, so I had to get to know tgtd instead of iscsi-target. Even though tgtd was designed to be easier to configure, the command line was still challenging enough, so I wrote some scripts to make it even easier for myself.
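To give an idea of what wrapping the tgtd command line can look like, here is a minimal sketch that builds the three `tgtadm` calls needed to export one block device: create the target, attach the device as a LUN, and allow initiators to connect. This is not the script I actually use. The function name, the target name `iqn.2010-01.example:backup`, and the device path `/dev/vg0/backup` are all hypothetical.

```python
import shlex


def export_commands(tid, iqn, backing_store, initiator="ALL"):
    """Build the tgtadm command lines that export one block device over iSCSI."""
    base = ["tgtadm", "--lld", "iscsi"]
    return [
        # 1. Create the target with the given target id and name.
        base + ["--op", "new", "--mode", "target",
                "--tid", str(tid), "--targetname", iqn],
        # 2. Attach the device as LUN 1 (tgtd keeps LUN 0 for its controller).
        base + ["--op", "new", "--mode", "logicalunit",
                "--tid", str(tid), "--lun", "1",
                "--backing-store", backing_store],
        # 3. Allow the given initiator address (ALL means any) to connect.
        base + ["--op", "bind", "--mode", "target",
                "--tid", str(tid), "--initiator-address", initiator],
    ]


if __name__ == "__main__":
    # Hypothetical target name and device; the commands are printed, not run.
    for cmd in export_commands(1, "iqn.2010-01.example:backup", "/dev/vg0/backup"):
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Printing the commands instead of running them makes it easy to check them first. To run them for real, each list can be passed to `subprocess.run` as root while tgtd is running.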

The End

So now I have everything running the way I wanted to from the start. Feels good!